Culture

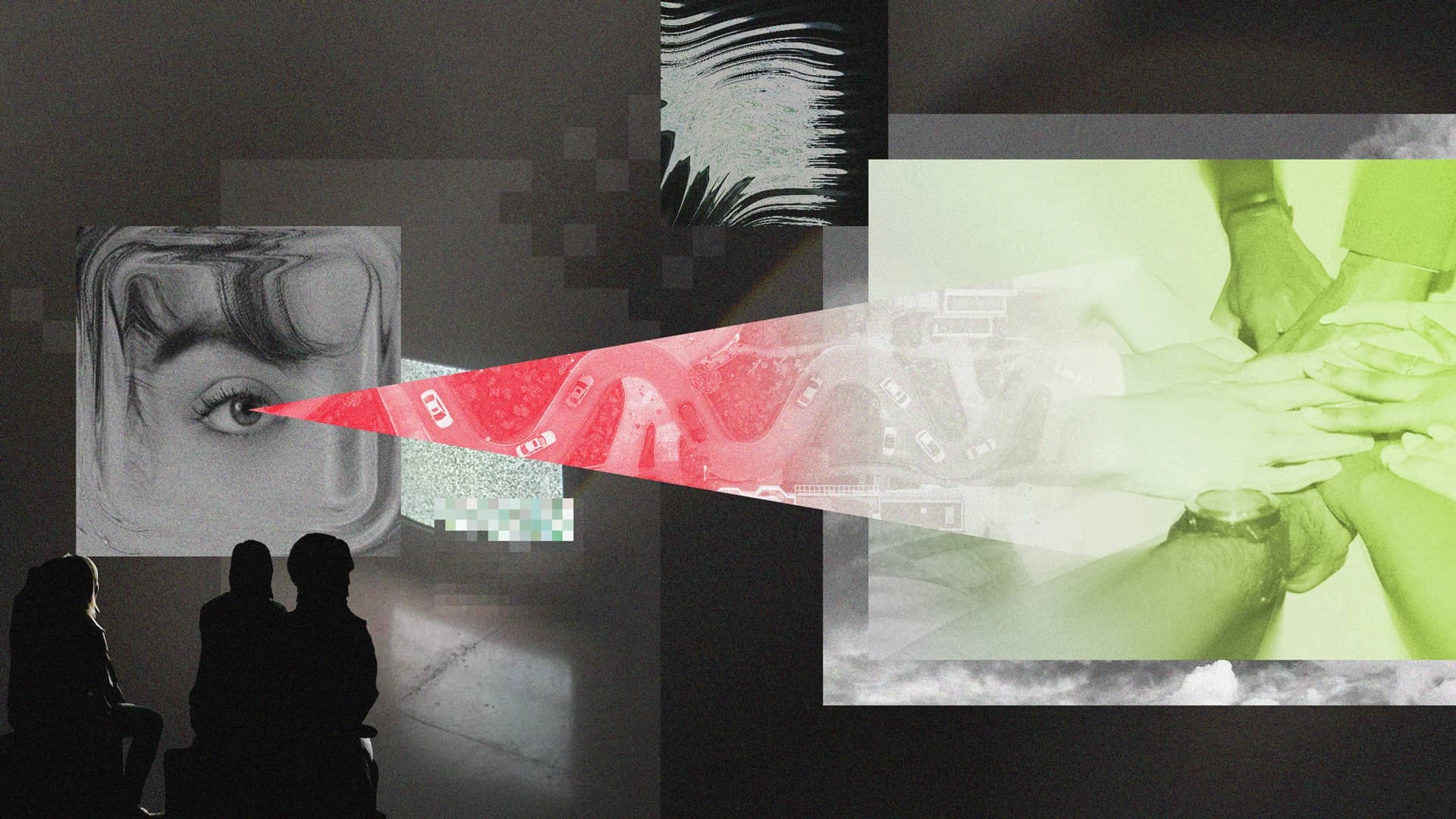

It’s OK to See Red: The Hidden Value of Negative Experiment Results

Learn more

TL;DR:

Marketing experimentation isn’t just a buzzword. It’s a strategy that directly connects your marketing efforts to business outcomes, driving ROI and improving conversion. Done right, experimentation allows brands to gather the evidence they need, make data-driven decisions, uncover what works, and maximize the impact of their resources.

Utilizing customer data is crucial in this process, as it helps identify shopping patterns, segment audiences, and tailor marketing strategies effectively. But, as with all good things, there’s a science to getting it right. Marketers can unlock opportunities most organizations overlook by adhering to rigorous methodologies, maximizing experiment volume, and focusing on meaningful changes.

Marketing experiments are a cornerstone of digital marketing, enabling businesses to test and measure the effectiveness of various strategies, tactics, and channels. By conducting these experiments, companies gain invaluable insight into what resonates with their audience and what falls flat, which lets them make data-driven decisions that optimize their marketing efforts.

Whether it’s conversion rate optimization, refining landing page designs, enhancing email marketing campaigns, or boosting social media engagement, marketing experiments provide a structured approach to understanding user behavior and improving conversion rates. In essence, marketing experiments help businesses move beyond guesswork.

By systematically testing different elements such as headlines, images, calls to action, and more, marketers can identify the most effective combinations that drive desired outcomes. This iterative process enhances the performance of individual web pages and campaigns and contributes to a more robust and effective overall marketing strategy.

At its core, marketing experimentation is about learning. While it may sound simple, it does require a fundamental shift in how teams approach campaigns and strategies. It’s not just about launching an email or tweaking an ad—it’s about running measurable tests that allow you to analyze relationships between your efforts and business outcomes.

When marketing experiments are designed and executed well, they provide clarity on incremental impact. This clarity allows brands to not only validate their efforts but also focus their resources in the most effective way to deliver ROI. Incorporating conversion optimization into these experiments can further enhance user engagement and maximize website effectiveness.

Historically, however, many marketers have focused solely on winning experiments, a dangerous misstep given that even leaders in experimentation, like Netflix and Microsoft, report success rates as low as 10% to 33% for their tests. The real value of experimentation is in the learning it generates, even when an experiment “fails.”

Setting up a marketing experiment begins with a clear hypothesis. This hypothesis should be specific and measurable, providing a solid foundation for the experiment. Next, identify the variables to be tested, such as different versions of a landing page or variations in email subject lines. It’s crucial to determine the metrics that will be used to measure success, such as conversion rates, click-through rates, or engagement levels.
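One way to keep those ingredients honest is to write the plan down as data before launch. The sketch below is a minimal illustration; the `ExperimentPlan` class, its fields, and the example metric names are hypothetical and not part of any particular platform's API.

```python
from dataclasses import dataclass, field


@dataclass
class ExperimentPlan:
    """Hypothetical pre-launch record of what an experiment tests and measures."""
    hypothesis: str                  # specific, measurable claim
    variants: list[str]              # versions being compared, control first
    primary_metric: str              # the metric that decides success
    secondary_metrics: list[str] = field(default_factory=list)

    def problems(self) -> list[str]:
        """Return obvious gaps in the plan (an empty list if none are found)."""
        issues = []
        if not self.hypothesis.strip():
            issues.append("missing hypothesis")
        if len(self.variants) < 2:
            issues.append("need a control and at least one variant")
        if not self.primary_metric:
            issues.append("missing primary metric")
        return issues


plan = ExperimentPlan(
    hypothesis="A shorter subject line raises email click-through rate by at least 5%",
    variants=["current_subject", "short_subject"],
    primary_metric="click_through_rate",
    secondary_metrics=["unsubscribe_rate"],
)
print(plan.problems())  # []
```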

Running the experiment then requires a well-built experimentation platform, such as Eppo, to randomly assign users to variations. Randomization ensures that any observed changes can be attributed to the variables being tested rather than to pre-existing differences between groups. Businesses can leverage various tools and platforms to set up and run experiments, including A/B testing software, multivariate testing tools, and comprehensive analytics platforms. These tools help streamline the process, making it easier to manage and analyze the results. Advanced experimentation platforms will also help target experiments based on specified consumer attributes when you’re interested in learning about the impact of a change on a specific segment.
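Platforms differ in how they randomize, but a common technique is deterministic hashing: hash the user ID together with an experiment key so each user lands in a stable bucket. The sketch below assumes an equal traffic split and uses hypothetical names; it is not Eppo's implementation.

```python
import hashlib
from collections import Counter


def assign_variant(user_id: str, experiment_key: str, variants: list[str]) -> str:
    """Map a user to a variant deterministically, with an approximately equal split."""
    # Including the experiment key means a user's bucket in one test
    # is independent of their bucket in another test.
    digest = hashlib.sha256(f"{experiment_key}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:15], 16) % 10_000  # stable bucket in 0..9999
    return variants[bucket * len(variants) // 10_000]


# The same user always gets the same variant for a given experiment.
counts = Counter(
    assign_variant(f"user-{i}", "checkout-test", ["control", "treatment"])
    for i in range(10_000)
)
print(counts)  # should be close to an even split
```

Because assignment is a pure function of the IDs, no lookup table is needed to keep a returning user in the same group.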

It’s tempting to think of marketing experimentation primarily as A/B testing, but not every question that crosses a CMO’s mind can be A/B tested. Take budget allocation: how much of our ad spend should go toward YouTube vs. TikTok? Or say you want to run a billboard campaign; we can’t randomize who does and doesn’t see a billboard.

This is where the toolbox expands to quasi-experimental methods like geolift testing, which allows organizations to measure the impact and efficiency of paid campaigns and channels by randomly assigning similar geographic regions to receive or not receive a campaign and comparing the localized rollouts.
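Dedicated geo-testing tools often use more sophisticated estimators, such as synthetic control, but the core idea can be sketched with a simple difference-in-differences estimate: randomly assign one region from each matched pair to the campaign, then compare how much treated regions changed against how much control regions changed over the same period. Function names, region names, and figures below are hypothetical placeholders, not real results.

```python
import random
from statistics import mean


def assign_matched_pairs(pairs, seed=0):
    """Randomly send one region of each matched pair to treatment, the other to control."""
    rng = random.Random(seed)
    treatment, control = [], []
    for region_a, region_b in pairs:
        if rng.random() < 0.5:
            region_a, region_b = region_b, region_a
        treatment.append(region_a)
        control.append(region_b)
    return treatment, control


def diff_in_diff(pre, post, treatment, control):
    """Average change in treated regions minus average change in control regions."""
    treated_change = mean(post[r] - pre[r] for r in treatment)
    control_change = mean(post[r] - pre[r] for r in control)
    return treated_change - control_change


# Illustrative weekly conversions per region before and during a campaign.
pre = {"A": 100, "B": 100, "C": 200, "D": 200}
post = {"A": 120, "B": 105, "C": 230, "D": 210}
print(diff_in_diff(pre, post, treatment=["A", "C"], control=["B", "D"]))  # 17.5
```

Subtracting the control regions' change removes trends that affect every region, such as seasonality, which a simple before/after comparison would wrongly credit to the campaign.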

This level of rigor supports informed decision-making about where and how to invest budgets for maximum conversion improvement.

When designing and running marketing experiments, several key factors must be considered to ensure reliable and actionable results. One of the most critical factors is sample size. Simply put, a larger sample size helps us better distinguish between “signal” and “noise” (in technical terms, it increases the statistical power of our tests), though it may also increase the cost and complexity of the experiment. It’s essential to strike a balance between obtaining meaningful data and maintaining practical feasibility.
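A sample-size calculation makes this trade-off explicit. The sketch below uses the standard normal-approximation formula for comparing two conversion rates; the baseline rate, the minimum detectable effect, and the defaults of alpha = 0.05 and 80% power are assumptions chosen for illustration.

```python
import math
from statistics import NormalDist


def sample_size_per_group(baseline_rate, relative_mde, alpha=0.05, power=0.80):
    """Users needed in each group to detect a relative lift in a conversion rate.

    Normal-approximation formula for a two-sided test of two proportions.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)        # rate we hope to detect
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# 5% baseline conversion, hoping to detect a 10% relative lift (5.0% -> 5.5%).
print(sample_size_per_group(0.05, 0.10))  # roughly 31,000 users per group
```

Because the required sample grows with the inverse square of the effect size, halving the detectable lift roughly quadruples the users needed, which is why small tweaks are expensive to measure.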

The duration of the test is another important consideration. The experiment should run long enough to capture sufficient data and account for seasonality, but not so long that it becomes impractical or costly.
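One way to turn a required sample size into a run time is to divide by eligible daily traffic and round up to whole weeks, so every day of the week is represented equally. The weekly rounding and one-week minimum are assumptions, common rules of thumb rather than fixed requirements, and the function name is hypothetical.

```python
import math


def estimate_duration_days(users_per_group, groups, daily_eligible_users, min_days=7):
    """Days needed to reach the target sample, rounded up to whole weeks."""
    raw_days = math.ceil(users_per_group * groups / daily_eligible_users)
    whole_week_days = math.ceil(raw_days / 7) * 7  # cover full weekly cycles
    return max(min_days, whole_week_days)


# About 31,000 users per group, two groups, 5,000 eligible visitors per day.
print(estimate_duration_days(31_000, 2, 5_000))  # 14
```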

When we want to know whether our changes drive meaningful differences, we often look for statistical significance, a measure of how surprising our results would be if the null hypothesis (no difference) were true. By carefully planning the statistical design of their experiments, businesses can run robust tests that yield valuable insights.
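For conversion-rate metrics, one common way to compute that measure of surprise is a two-proportion z-test. The sketch below is a textbook version under a normal approximation with illustrative counts; experimentation platforms may use different methods (for example, sequential or Bayesian analysis).

```python
import math
from statistics import NormalDist


def two_proportion_p_value(conversions_a, users_a, conversions_b, users_b):
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a = conversions_a / users_a
    rate_b = conversions_b / users_b
    # Under the null hypothesis both groups share one pooled conversion rate.
    pooled = (conversions_a + conversions_b) / (users_a + users_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / users_a + 1 / users_b))
    z = (rate_b - rate_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Illustrative: 5.0% vs 6.0% conversion with 10,000 users per group.
print(two_proportion_p_value(500, 10_000, 600, 10_000))  # about 0.002, well below 0.05
```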

A recurring problem in marketing experimentation is cutting corners on scientific rigor, which can lead to skewed results and misinformed decisions. For instance, if imbalances in traffic distribution between test groups aren’t detected early, weeks of effort can be wasted. Similarly, relying on small sample sizes can exaggerate early results, leading to overconfidence in campaigns that ultimately underperform. Running the same experiment across different markets with a consistent methodology can help validate hypotheses and make results easier to share.
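A traffic imbalance between groups is often called a sample ratio mismatch (SRM), and a chi-square goodness-of-fit test is a common way to catch it. The sketch below assumes a two-group test with a planned split; the 0.001 alarm threshold is a conservative convention rather than a universal rule, and the function names are hypothetical.

```python
import math


def srm_p_value(control_users, treatment_users, treatment_share=0.5):
    """Chi-square (1 degree of freedom) p-value for a two-group traffic split."""
    total = control_users + treatment_users
    expected_control = total * (1 - treatment_share)
    expected_treatment = total * treatment_share
    chi_sq = ((control_users - expected_control) ** 2 / expected_control
              + (treatment_users - expected_treatment) ** 2 / expected_treatment)
    # For 1 degree of freedom, the chi-square survival function equals erfc(sqrt(x / 2)).
    return math.erfc(math.sqrt(chi_sq / 2))


def has_sample_ratio_mismatch(control_users, treatment_users,
                              treatment_share=0.5, threshold=0.001):
    """Flag splits too lopsided to be explained by chance alone."""
    return srm_p_value(control_users, treatment_users, treatment_share) < threshold


print(has_sample_ratio_mismatch(50_000, 50_200))  # False: within normal noise
print(has_sample_ratio_mismatch(50_000, 48_500))  # True: investigate before trusting results
```

Running this check daily, rather than only at the end, is what turns it into the early warning the paragraph above calls for.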

To prevent these challenges, it’s essential to build experiments on a foundation of trustworthy, clean data. That “single source of truth” ensures that metrics in a marketing dashboard match those used across the organization, from product teams to executives. Additionally, marketers need to lean on tools like sample-size calculators and implement real-time traffic monitoring to catch issues early, saving time and resources.

Running a high volume of marketing experiments might sound daunting, but it’s a critical step in generating the learnings needed to optimize ROI. The reality is that most experiments won’t generate lift, so getting results means increasing the number of tests conducted. Booking.com serves as a gold standard for this. Their approach focuses on equipping all team members, not just data experts, with the tools to design, execute, and analyze experiments.

One simple but highly effective solution? Create repeatable defaults. For example, standardize metrics for common experiments (such as purchase conversion rates for checkout flows) and automate processes like rollout strategies. This removes bottlenecks and accelerates experimentation cycles. Equally important is the adoption of self-service analytics tools, reducing reliance on specialized teams for evaluating results.
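Repeatable defaults can be as simple as a shared lookup table that every new experiment starts from. The experiment types, metric names, and rollout stages below are hypothetical placeholders; the point is that a checkout test picks up purchase conversion as its primary metric without anyone redefining it.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ExperimentDefaults:
    primary_metric: str
    guardrail_metrics: tuple[str, ...]
    rollout_stages: tuple[float, ...]  # share of traffic exposed at each stage


# Hypothetical shared defaults, maintained once and reused by every team.
DEFAULTS = {
    "checkout_flow": ExperimentDefaults(
        primary_metric="purchase_conversion_rate",
        guardrail_metrics=("refund_rate", "support_tickets_per_order"),
        rollout_stages=(0.05, 0.50, 1.00),
    ),
    "email_campaign": ExperimentDefaults(
        primary_metric="click_through_rate",
        guardrail_metrics=("unsubscribe_rate",),
        rollout_stages=(0.10, 1.00),
    ),
}


def new_experiment_config(experiment_type, **overrides):
    """Start from the shared defaults and apply only the fields a team changes."""
    return replace(DEFAULTS[experiment_type], **overrides)


config = new_experiment_config("checkout_flow", rollout_stages=(0.10, 1.00))
print(config.primary_metric)  # purchase_conversion_rate
```

Freezing the dataclass means a team can only customize by creating a new config, so the shared defaults themselves cannot drift.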

While maximizing experiment volume is crucial, it’s equally important to swing for the fences occasionally. Small, incremental changes, such as swapping a button color or slightly tweaking messaging, sometimes yield negligible improvements. Bolder changes, like introducing customer testimonials on a sales page, can significantly influence visitor trust and conversion rates.

Significant results often require bold moves. However, taking bigger risks doesn’t mean acting recklessly. Building guardrails within your experimentation program ensures that even high-stakes tests come with manageable downside risks. For instance, a company might set clear thresholds for negative customer impact, such as limits on increased support ticket volume.
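A support-ticket guardrail like this can be expressed as a simple rule checked while the test runs. This sketch compares ticket rates against a hypothetical 10% maximum relative increase; it uses point estimates only, so in practice you would pair it with a significance test before acting on a breach.

```python
def guardrail_breached(control_tickets, control_users,
                       treatment_tickets, treatment_users,
                       max_relative_increase=0.10):
    """True if the treatment's support-ticket rate exceeds the allowed increase."""
    control_rate = control_tickets / control_users
    treatment_rate = treatment_tickets / treatment_users
    return treatment_rate > control_rate * (1 + max_relative_increase)


print(guardrail_breached(100, 10_000, 105, 10_000))  # False: +5% is within the limit
print(guardrail_breached(100, 10_000, 130, 10_000))  # True: +30% breaches the limit
```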

Real-world examples showcase just how effective experimentation can be:

Analyzing and interpreting the results of marketing experiments requires a blend of statistical knowledge and business acumen. Statistical methods help determine whether the results are significant, providing a foundation for informed decision-making. However, it’s equally important to interpret these findings within the context of the broader marketing strategy and business goals.

Marketers should consider the limitations and potential biases of the experiment, such as sample size constraints or external factors that may have influenced the results. By critically evaluating the data, businesses can draw meaningful conclusions and apply these insights to future marketing efforts. This iterative process of testing, learning, and optimizing is key to achieving sustained success in digital marketing.

Once the results of a marketing experiment are analyzed and interpreted, the next step is to implement the changes and measure their impact on digital marketing ROI. This may involve adjusting the design of landing pages, refining email marketing campaigns, or optimizing social media ads based on the insights gained from the experiment. By continuously testing and refining their marketing efforts, businesses can improve conversion rates, increase revenue, and achieve a higher return on investment (ROI) from their digital marketing campaigns.

Measuring digital marketing ROI involves tracking key performance indicators (KPIs) and comparing them to the baseline metrics established before the experiment. This ROI calculation helps businesses understand the effectiveness of their marketing investments and make data-driven decisions to allocate resources more efficiently. By embracing a culture of continuous experimentation and optimization, companies can drive significant improvements in their marketing performance and overall business outcomes.
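Once incremental revenue is known, the ROI calculation itself is simple arithmetic: ROI = (incremental revenue - cost) / cost, where incremental revenue is the measured outcome minus the baseline. The figures below are illustrative, and the baseline could be the pre-experiment metrics described above or a randomized control group.

```python
def marketing_roi(observed_revenue, baseline_revenue, campaign_cost):
    """Return on investment from the revenue a campaign added over its baseline."""
    incremental_revenue = observed_revenue - baseline_revenue
    return (incremental_revenue - campaign_cost) / campaign_cost


# Illustrative: $130k observed vs. $100k baseline on $20k of spend.
print(marketing_roi(130_000, 100_000, 20_000))  # 0.5, i.e. a 50% return
```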

To implement effective experimentation strategies that bridge marketing tactics to business outcomes, consider the following tips:

By building a culture of rigorous marketing experimentation, marketers can reliably connect their efforts to measurable business outcomes. Experiment boldly, learn continuously, and watch as your ROI optimization efforts deliver visible improvements in conversion and impact.